Abstract

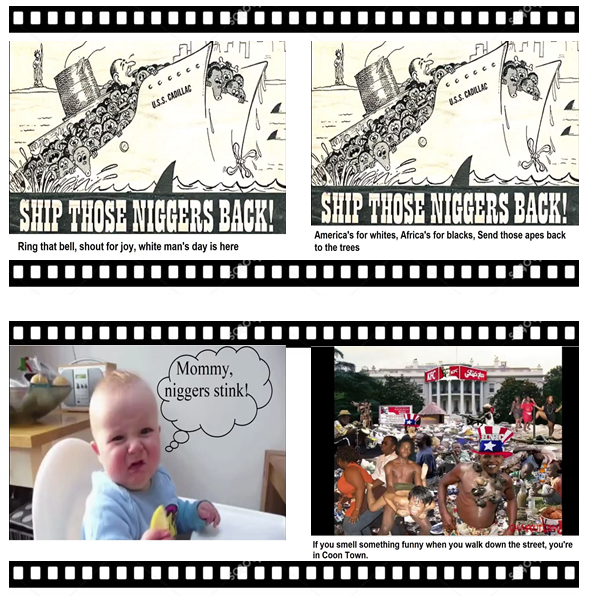

Hate speech has become one of the most significant issues in modern society, with implications in both the online and the offline world. As a result, hate speech research has recently gained a lot of traction. However, most of the work has focused primarily on text, with relatively little work on images and even less on videos. Thus, early-stage automated video moderation techniques are needed to handle the videos that are being uploaded and keep platforms safe and healthy. With a view to detecting and removing hateful content from video sharing platforms, our work focuses on hate video detection using multiple modalities. To this end, we curate ∼43 hours of videos from BitChute and manually annotate them as hate or non-hate, along with the frame spans that could explain the labelling decision. To collect relevant videos, we used search keywords drawn from hate lexicons. We observe various cues in the images and audio of hateful videos. Further, we build deep learning multi-modal models to classify the hate videos and observe that using all the modalities of the videos improves overall hate speech detection performance (accuracy = 0.798, macro F1-score = 0.790), a gain of ∼5.7% in macro F1-score over the best uni-modal model. In summary, our work takes the first step toward understanding and modeling hateful videos on video hosting platforms such as BitChute.